This paper was written as part of my investigation into contrastive learning from images for the Computer Vision module at Cambridge. I implement the current state-of-the-art architecture for the task and, using a loss function I developed and implemented myself, improve upon the current best DeiT-based implementation by 2.65%.

Abstract

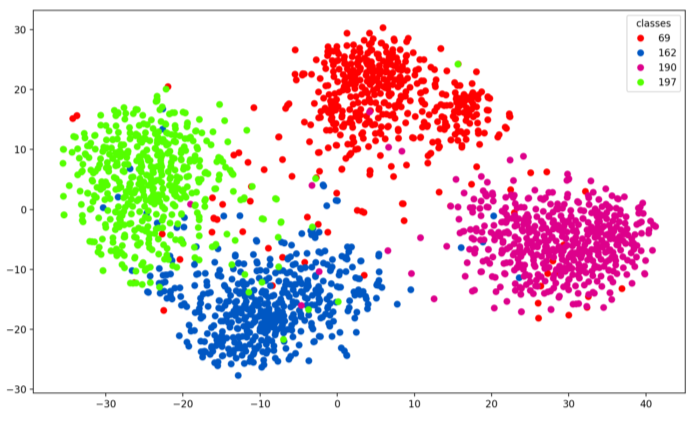

In this paper, a novel contrastive loss function is presented that learns the similarities and differences between images from contrasting image labels, reaching 78.07% accuracy. The DeiT-B architecture is used, and we verify classification performance within 2% of the current state of the art on the TinyImageNet dataset. Our loss function is a supervised batched pairwise contrastive (SBPC) loss: it is computed over the entire batch at once, which is far more computationally efficient than evaluating image pairs one at a time. The results were verified during training and through a t-SNE visualisation of the produced embeddings. We conclude that supervised contrastive learning is an effective technique for learning from contrasting image labels, and the resulting models generalise well to unseen datasets. However, models trained with this technique performed worse than those trained directly for classification...
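The abstract does not spell out the SBPC formula, so the following is only a minimal sketch under stated assumptions. It uses the classic margin-based pairwise contrastive loss, Euclidean distance between embeddings, a hypothetical `margin` hyperparameter, and an average over all unique pairs in the batch. The function names `pairwise_distances` and `batched_pairwise_contrastive_loss` are illustrative and are not taken from the original implementation. The sketch shows why batching helps: all N(N-1)/2 pairwise distances come from a single matrix product rather than a Python loop over pairs.

```python
import numpy as np


def pairwise_distances(z: np.ndarray) -> np.ndarray:
    """Euclidean distance between every pair of rows in z, computed in one pass.

    Uses ||a - b||^2 = ||a||^2 + ||b||^2 - 2 a.b, so the whole (N, N) matrix
    comes from a single matrix product.
    """
    sq_norms = np.sum(z ** 2, axis=1)
    d2 = sq_norms[:, None] + sq_norms[None, :] - 2.0 * (z @ z.T)
    # Clip small negative values caused by floating-point cancellation.
    return np.sqrt(np.maximum(d2, 0.0))


def batched_pairwise_contrastive_loss(embeddings, labels, margin: float = 1.0) -> float:
    """Hypothetical sketch of a supervised, batched pairwise contrastive loss.

    Assumed formulation (not confirmed by the paper). For each unique pair
    (i, j) with distance d_ij:
      same label:      d_ij^2                    (pull the pair together)
      different label: max(0, margin - d_ij)^2   (push apart up to the margin)
    The loss is the mean over all N * (N - 1) / 2 unique pairs in the batch.
    """
    z = np.asarray(embeddings, dtype=float)
    y = np.asarray(labels)
    n = z.shape[0]
    if n < 2:
        raise ValueError("need at least two embeddings to form a pair")

    d = pairwise_distances(z)
    same = y[:, None] == y[None, :]  # (N, N) boolean "same class" mask
    positive = d ** 2
    negative = np.maximum(0.0, margin - d) ** 2
    per_pair = np.where(same, positive, negative)

    # Keep each unordered pair once and drop the diagonal (i == j).
    upper = np.triu_indices(n, k=1)
    return float(per_pair[upper].mean())


# Usage: a batch of 4 random 8-dimensional embeddings with class labels.
rng = np.random.default_rng(0)
batch = rng.normal(size=(4, 8))
print(batched_pairwise_contrastive_loss(batch, [0, 1, 0, 1], margin=1.0))
```

In an actual training loop the same computation would be written in an autodiff framework so that gradients flow back to the DeiT-B encoder; NumPy is used here only to keep the sketch dependency-light. Normalising embeddings to unit length before computing distances is a common choice, but it is likewise an assumption rather than something the abstract states.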